There is little doubt now that driverless cars and trucks will be a big part of the future on our roads. Everyone from the car manufacturers to Google to Uber and more is developing and testing the technology. In some ways, the tech has worked very well. In other, more tragic ways, it has not. Earlier this year, a self-driving Uber vehicle struck and killed a woman who was crossing a darkened street in Arizona.

But beyond the technical questions of designing and building these self-driving machines lie the moral ones. Namely, if an accident is unavoidable, who should pay the ultimate price with their life?

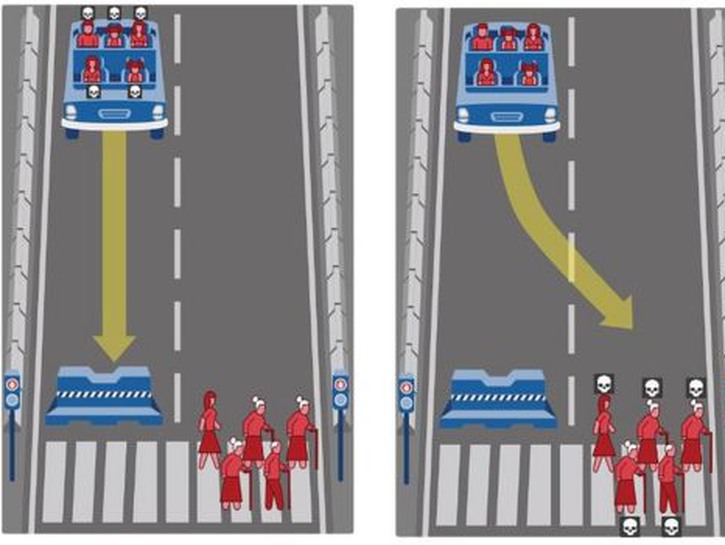

What can we teach cars with artificial intelligence (AI) about decisions where the outcome is bad no matter what happens? For example, imagine an accident with only two possible outcomes: the self-driving car either hits a family of four crossing the street, or it swerves out of the way and falls off a bridge, killing the family of four riding in the vehicle.

What decision does that car make?

riopatuca/Shutterstock.com

A group of researchers at the Massachusetts Institute of Technology has set up an experiment to find out what people think about this. They call it the “Moral Machine.”

The basics of the experiment are that people are polled on a number of scenarios in which they choose between saving the people in the self-driving car and saving pedestrians crossing the street. Across the scenarios, the identities of the pedestrians change and include:

- a successful business person

- a known criminal

- a group of elderly people

- a herd of cows

- pedestrians who were crossing the road when they were told to wait

The experiment is now four years old and the first set of results has recently been released.

So, how did people answer?

Source: MIT MEDIA LAB

Well, some of the answers are what you would expect. Human lives were valued over animal lives, for example. The number of possible injuries or deaths also played a large role, with the decision that causes fewer deaths seen as the better one.

A few results were a little surprising, such as smaller trends toward saving richer people over poorer people and females over males.

There was also the decision to save pedestrians over those in the car, which to us is the most interesting. The car making the decision might be expected to prioritize its owners’ safety over the safety of those outside the vehicle.

The researchers at MIT hope that their study will go a long way toward shaping future decision-making in autonomous vehicles and help to avoid terrible crashes like the one in Arizona in March.
